TLDR: The search engine is here - wholeearth.surf

I'm building a search engine on top of https://t.co/miPS5VZAnU - The digitized repository of the Whole Earth publications by @stewartbrand

— Aaren (@aarenstade) November 3, 2023

These are a treasure trove and thought a search tool would be the perfect project.

Here's a video of my progress! pic.twitter.com/16CGdUNUQq

If you haven't heard of Stewart Brand or The Whole Earth Catalogs, then you're about to discover something very interesting.

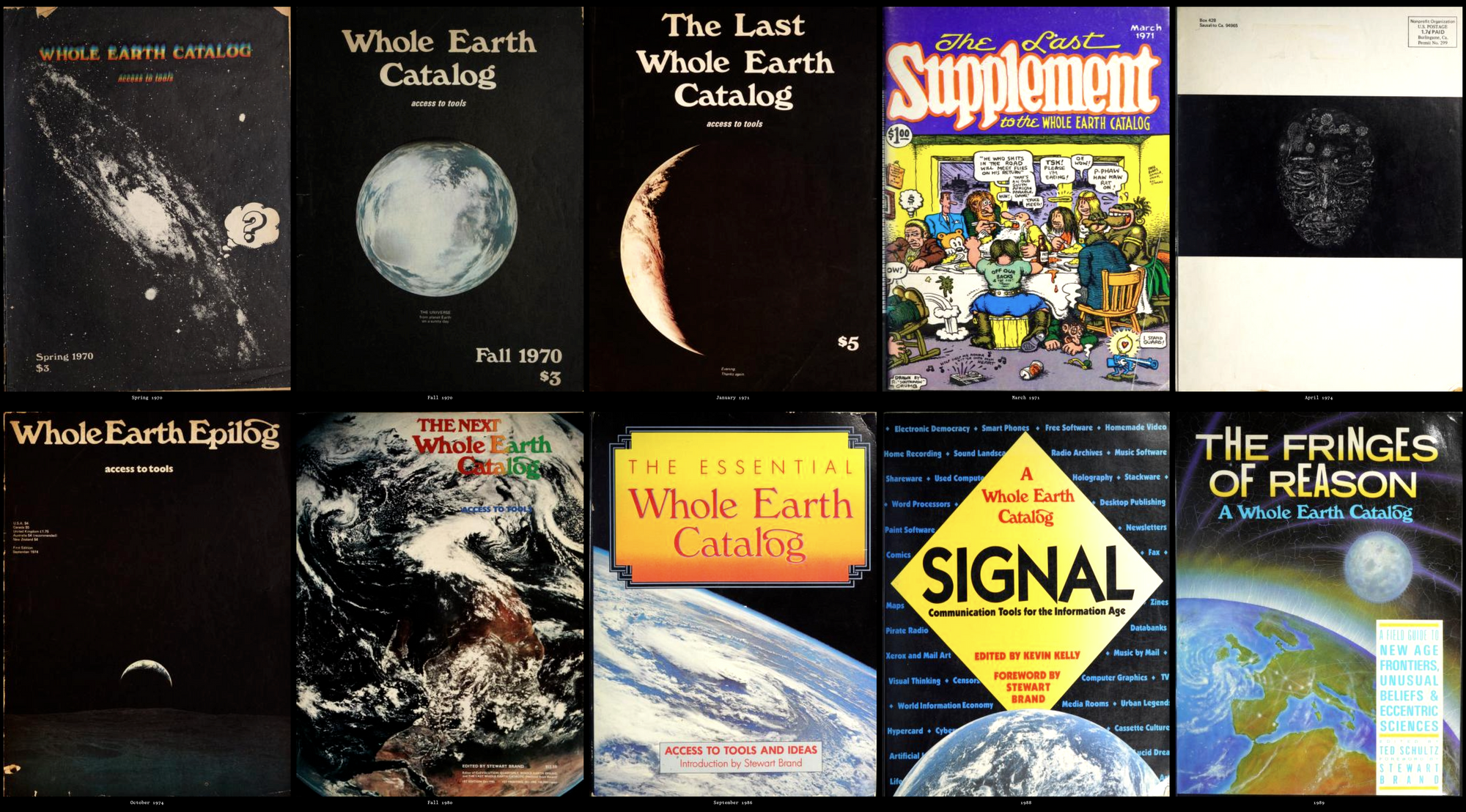

The Whole Earth Publications were a series of over 136 catalogs, published from 1970 to 2002, which covered and reviewed a wide variety of products, books, and ideas. In part they grew out of the 1960s cultural revolution (through Stewart Brand), and I would describe the catalogs as sharing a strong common philosophy.

Wikipedia describes them this way: "The editorial focus was on self-sufficiency, ecology, alternative education, 'do it yourself' (DIY), and holism, and featured the slogan 'access to tools'." The publications had a huge impact on counterculture movements in the 1970s and inspired technological movements from the late 20th century to now.

They are a treasure trove of ideas which I find deeply interesting, but across the publications there are ~21,000 pages, and so I thought a fun project would be to build a search engine!

This post is more of a technical overview, but feel free to check out the search engine at wholeearth.surf!

Step 1 - Scrape the Publications

A small team had digitized the publications and hosted them at wholeearth.info, so first I had to collect all the catalogs in PDF form.

Total of 21,028 pages!

With all this data, next was processing the text.

Step 2 - Handling the Text

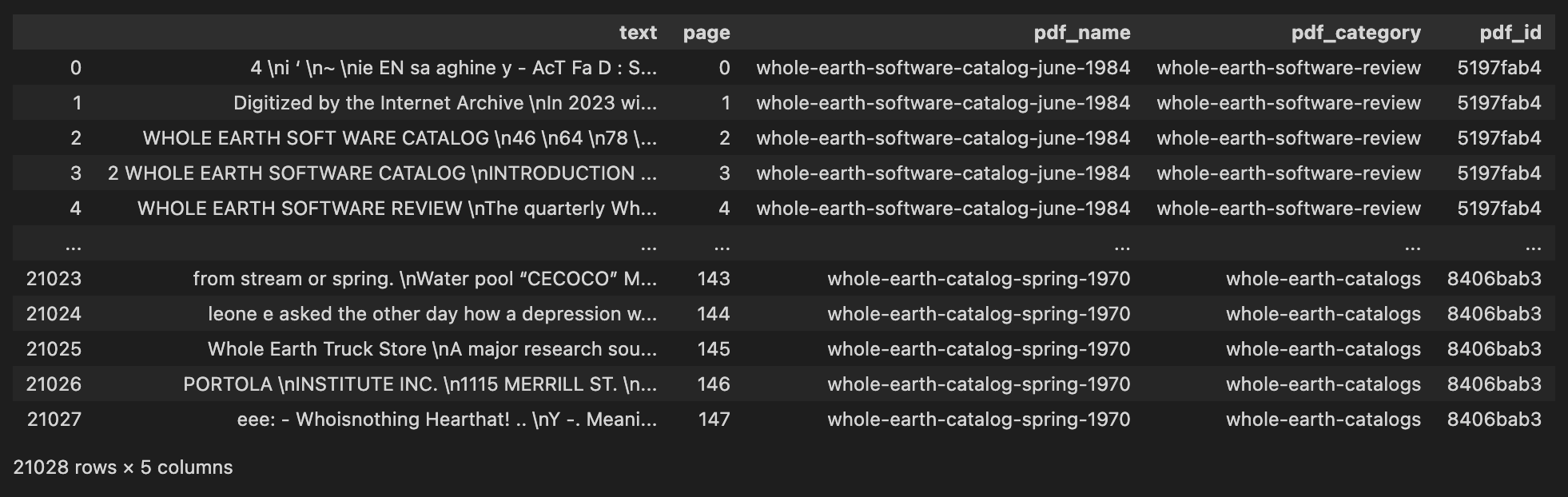

Because these PDFs come from photo scans, the extracted text can be very messy. Here is a side-by-side comparison of a randomly picked page.

While it's possible to build a search engine using this data, there's a lot of noise. This would likely mean many incorrect, missing, or generally confusing results. Because these are catalogs, every page is about many different things, and so the text on the page is mixed. Also, some writing is stylized or esoteric, and might not match with simpler queries.

So I decided to run every page through GPT to summarize, identify key topics, and create tags. This would simplify the text and create a cleaner database to search against.

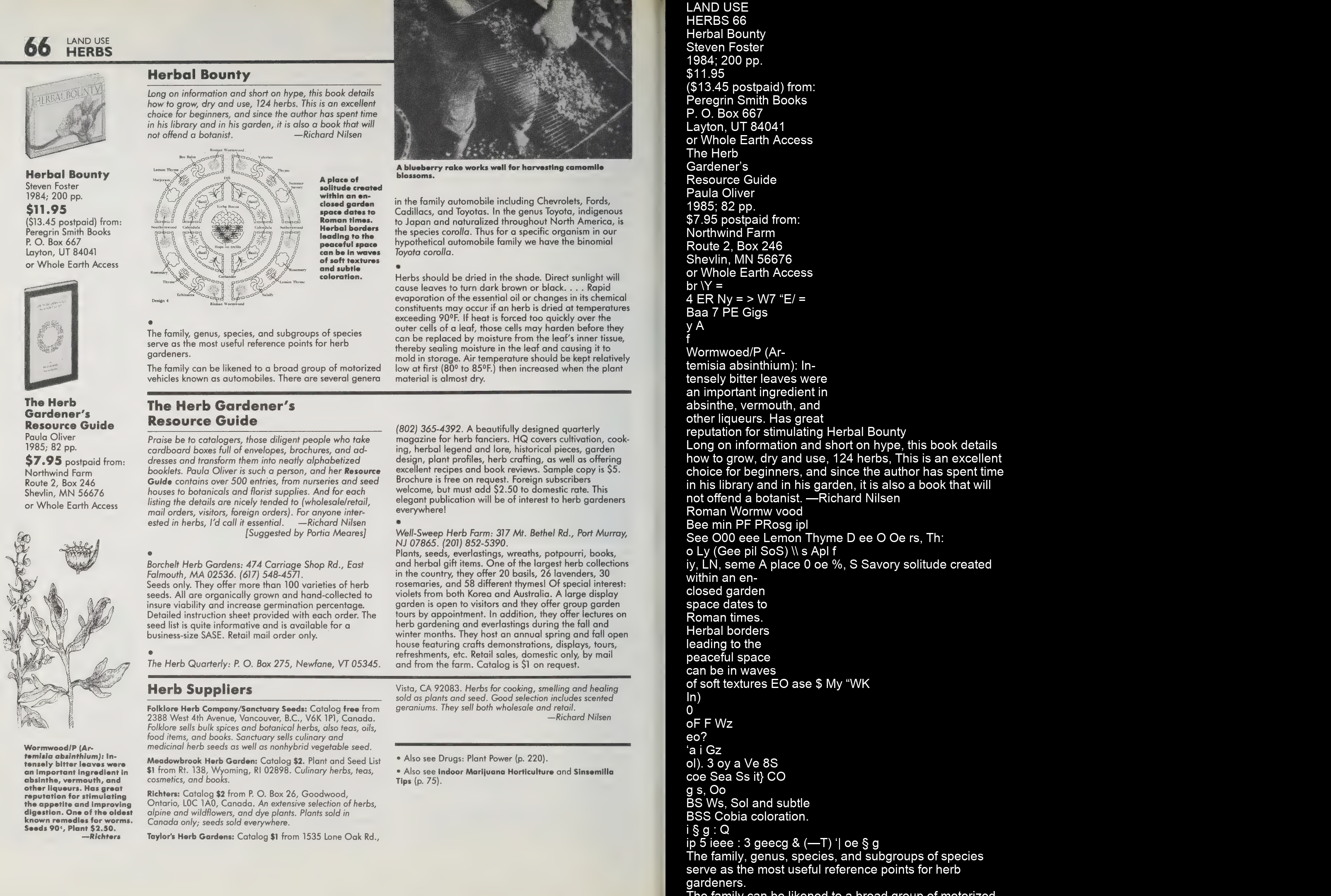

The challenge was processing everything fast enough and cheap enough. Below is a rough cost estimate with GPT-3.5 and GPT-4.
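As a rough illustration of how an estimate like this can be put together, here is a minimal sketch. The token counts per page and the per-1K-token prices are placeholder assumptions, not the figures I actually used, so plug in the current pricing for whichever models you're comparing.

```python
def estimate_cost(pages, input_tokens_per_page, output_tokens_per_page,
                  input_price_per_1k, output_price_per_1k):
    """Estimate the total cost (in dollars) of processing every page once."""
    per_page = (
        (input_tokens_per_page / 1000) * input_price_per_1k
        + (output_tokens_per_page / 1000) * output_price_per_1k
    )
    return pages * per_page


if __name__ == "__main__":
    # Hypothetical model names and prices, for illustration only.
    models = {
        "cheaper-model": {"input_price_per_1k": 0.001, "output_price_per_1k": 0.002},
        "stronger-model": {"input_price_per_1k": 0.03, "output_price_per_1k": 0.06},
    }
    for name, prices in models.items():
        total = estimate_cost(
            pages=21_028,                 # total page count from Step 1
            input_tokens_per_page=1_500,  # assumed average, not measured
            output_tokens_per_page=500,   # assumed average, not measured
            **prices,
        )
        print(f"{name}: ~${total:,.2f}")
```

The output-token assumption matters most: generating more text per page than planned pushes the real cost above the estimate.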

So I went with GPT-3.5. Unfortunately, some information loss was inevitable because the input text was so messy and GPT-3.5 is not the strongest model. For example, names and titles were sometimes missed, but overall this method captured a lot.

So I set up a pipeline to process every page, which consisted of:

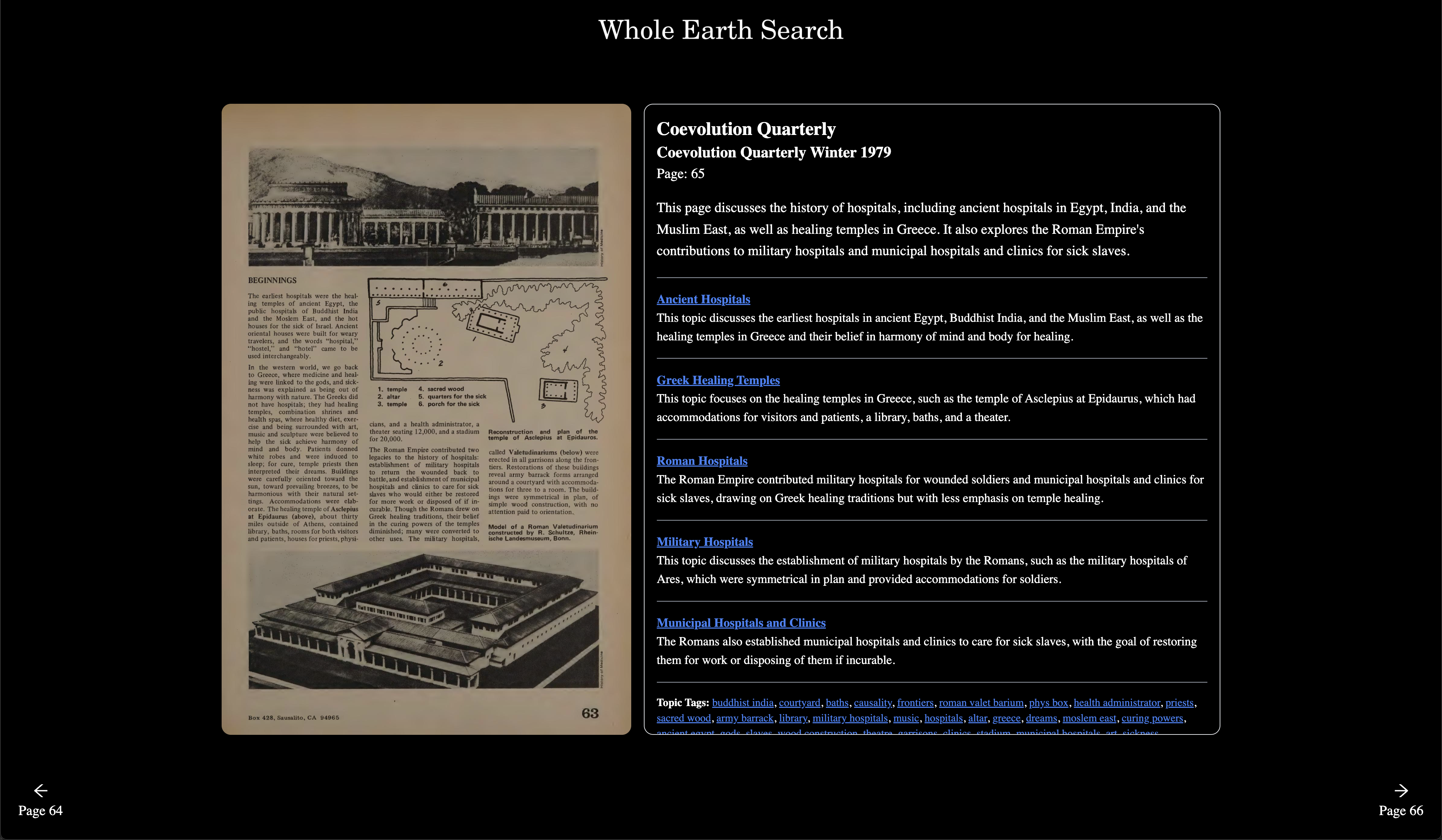

- Creating paragraph and sentence summaries of the page.

- Extracting key topics discussed in the page, with a summary of each.

- Extracting tags for topics, titles of things, and names of people.

I then ran this concurrently for every page, and with GPT-3.5's token rate limit, managed to process the Whole Earth Catalog (the first collection with 3,374 pages) in 6 hours for $18.00!
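Running pages concurrently while staying under a rate limit can be sketched roughly like this. It's a simplified illustration, not my actual pipeline: `summarize_page` is a hypothetical stand-in for the real GPT call, and a simple cap on in-flight requests stands in for proper token-based rate limiting.

```python
import asyncio


async def summarize_page(page_text: str) -> dict:
    """Hypothetical stand-in for the LLM call; returns a fake record."""
    await asyncio.sleep(0)  # yield control, as a real network request would
    return {"summary": page_text[:40], "topics": [], "tags": []}


async def process_pages(pages: list[str], max_concurrent: int = 8) -> list[dict]:
    """Process all pages, with at most `max_concurrent` requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def worker(text: str) -> dict:
        async with semaphore:
            return await summarize_page(text)

    # gather() returns results in the same order as the input pages.
    return await asyncio.gather(*(worker(p) for p in pages))


def run_pipeline(pages: list[str], max_concurrent: int = 8) -> list[dict]:
    return asyncio.run(process_pages(pages, max_concurrent))


if __name__ == "__main__":
    records = run_pipeline(["first page text", "second page text"])
    print(len(records), "pages processed")
```

In practice you'd also want retries with backoff for failed requests, and to save each result as it completes so a crash doesn't lose hours of work.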

This was the result of the first processing run on the Whole Earth Catalogs (3,374 pages).

So after doing that for all 21,000 pages (which took a week longer than I expected haha), the total cost was somewhere around $95. That's higher than my estimate because I generated more text than I had estimated.

But that gave me clean text information to search against!

Step 3 (going on a tangent) - Extracting Images

Around this time (Nov 2023), multimodal models that could describe images were coming out. I was experimenting with LLaVA (a local image-to-text model), and I thought it would be great to search against the images in the publications as well.

At the moment this is not a completed feature, but here's some of my experimentation.

I tested LayoutParser, which exposes some local document models to detect page layout. Unfortunately, I got mixed results using this. It seemed like the models can't handle how dynamic these pages are.

Below are some LayoutParser model comparisons on a test page. The raw page images are shown here, but I did some preprocessing (thresholding, alignment, etc.) before running the models.

Here's a comparison of thresholding methods, which I tried to see whether they could help me detect image areas in other ways.

Eventually I landed on an odd combination of methods:

- Using both LayoutParser and Tesseract OCR bounding boxes to crop the images.

- Passing the cropped images through a series of convoluted checks and filters to remove junk.
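To give a flavor of what one of these filters might look like, here is a minimal sketch. It is not my actual filter chain: the `Box` type, the thresholds, and the rule itself (drop tiny regions and regions mostly covered by OCR text boxes) are all illustrative assumptions.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def overlap_area(a: Box, b: Box) -> float:
    """Area of the intersection of two boxes (0 if they don't overlap)."""
    width = min(a.x2, b.x2) - max(a.x1, b.x1)
    height = min(a.y2, b.y2) - max(a.y1, b.y1)
    return max(0.0, width) * max(0.0, height)


def likely_image_boxes(layout_boxes, text_boxes,
                       min_area=10_000.0, max_text_fraction=0.3):
    """Keep layout regions that are big enough and not mostly text.

    Text coverage is approximated by summing overlaps, so overlapping
    text boxes are double counted; good enough for a rough filter.
    """
    kept = []
    for box in layout_boxes:
        if box.area < min_area:
            continue  # too small to be a useful image crop
        covered = sum(overlap_area(box, t) for t in text_boxes)
        if covered / box.area > max_text_fraction:
            continue  # region is mostly OCR text, probably not an image
        kept.append(box)
    return kept
```

The boxes that survive would then be cropped out of the page image and passed on to whatever further checks you want.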

This sort of works, and I ran it on all the pages, but I'd like to find a better methodology. I'm keeping tabs on the world of Document AI to see new developments, and may experiment with training a custom model (if I can find the time!).

Step 4 - Making the text searchable

This is a relatively straightforward step, as the ecosystem for searching large amounts of text is exploding and there are lots of tools available. You can read more about how this works here and here.

It all involves an embedding model (I used text-embedding-ada-002) and a vector database (I used Weaviate for this project).

Overall, it cost around $7.00 to create embeddings for everything (including the source page text)!

Whenever a search query comes in, you embed it (optionally after restructuring or rephrasing it) and perform a similarity search against your vector database.

You can add some metadata to the results, so when they come back you can reference the source of where the matched text comes from.
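Conceptually, the search boils down to something like the sketch below. This is a toy, in-memory version for illustration only: a real setup would get vectors from the embedding model and let the vector database do the ranking, and the three-dimensional vectors and metadata fields here are made up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vector, index, top_k=3):
    """Return the top_k (score, metadata) pairs, best match first."""
    scored = [
        (cosine_similarity(query_vector, entry["vector"]), entry["metadata"])
        for entry in index
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Toy index: each entry pairs an embedding with metadata about its source.
index = [
    {"vector": [1.0, 0.0, 0.0], "metadata": {"publication": "example-a", "page": 12}},
    {"vector": [0.0, 1.0, 0.0], "metadata": {"publication": "example-b", "page": 40}},
    {"vector": [0.7, 0.7, 0.0], "metadata": {"publication": "example-c", "page": 7}},
]

if __name__ == "__main__":
    for score, meta in search([1.0, 0.1, 0.0], index, top_k=2):
        print(f"{score:.3f}", meta)
```

Because each entry carries its metadata along, every hit can link straight back to the publication and page it came from.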

Step 5 - Creating the UI

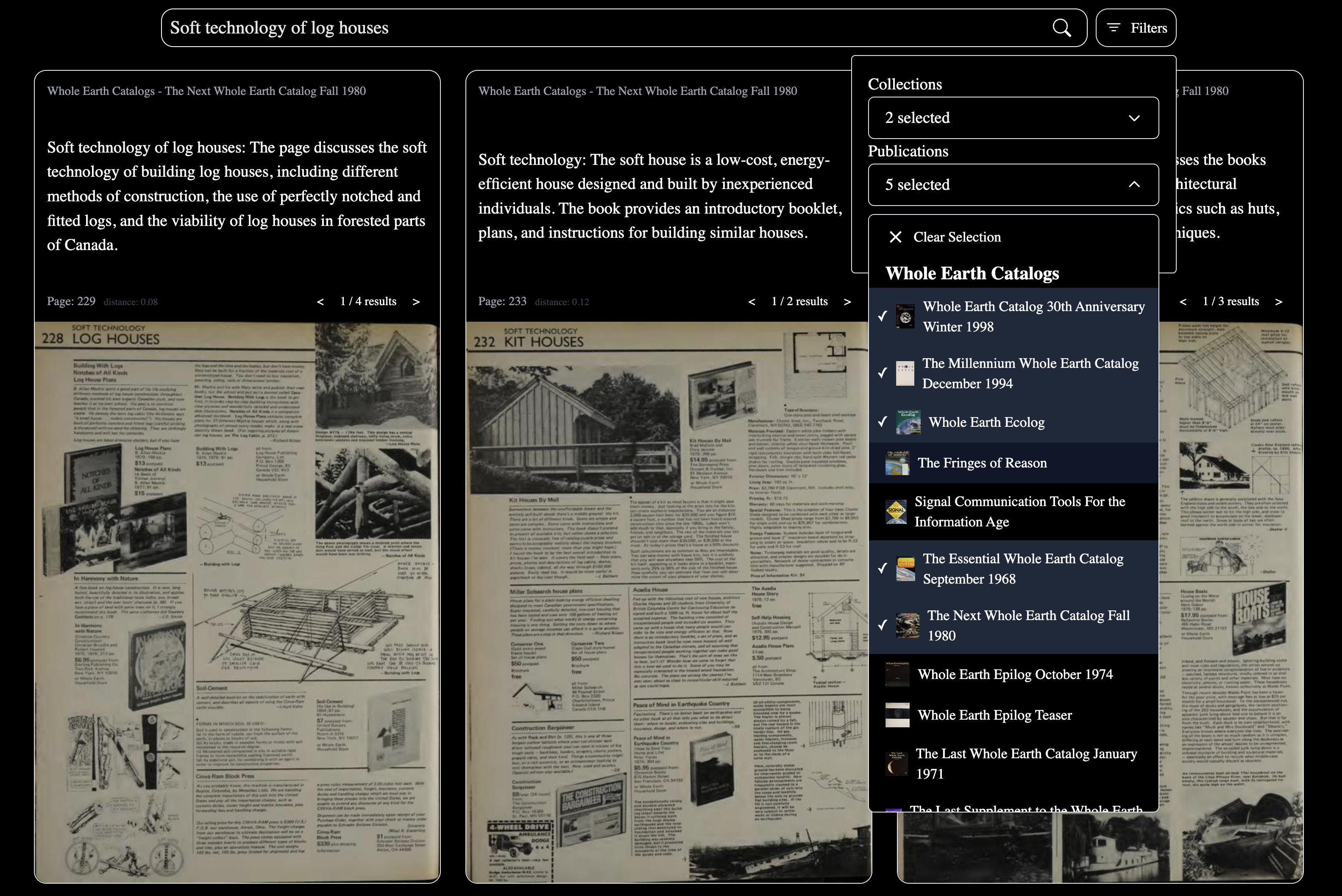

I built the website using TypeScript and Next.js over the course of a week or so. It allows you to search, filter your searches, and click on pages to view more info.

Conclusion

That's basically it!

This was a very fun project to build. You can check it out at https://wholeearth.surf

Thanks for reading :)